Probabilistic & Causal Models for Responsible AI

Background

Deep learning models have achieved high performance across many domains, including medical decision-making, autonomous vehicles, and decision support systems. Despite this success, their inner mechanisms remain opaque: the internal representations are too complex for a human to interpret. This opacity makes it hard to understand how or why a deep learning model arrives at a given prediction.

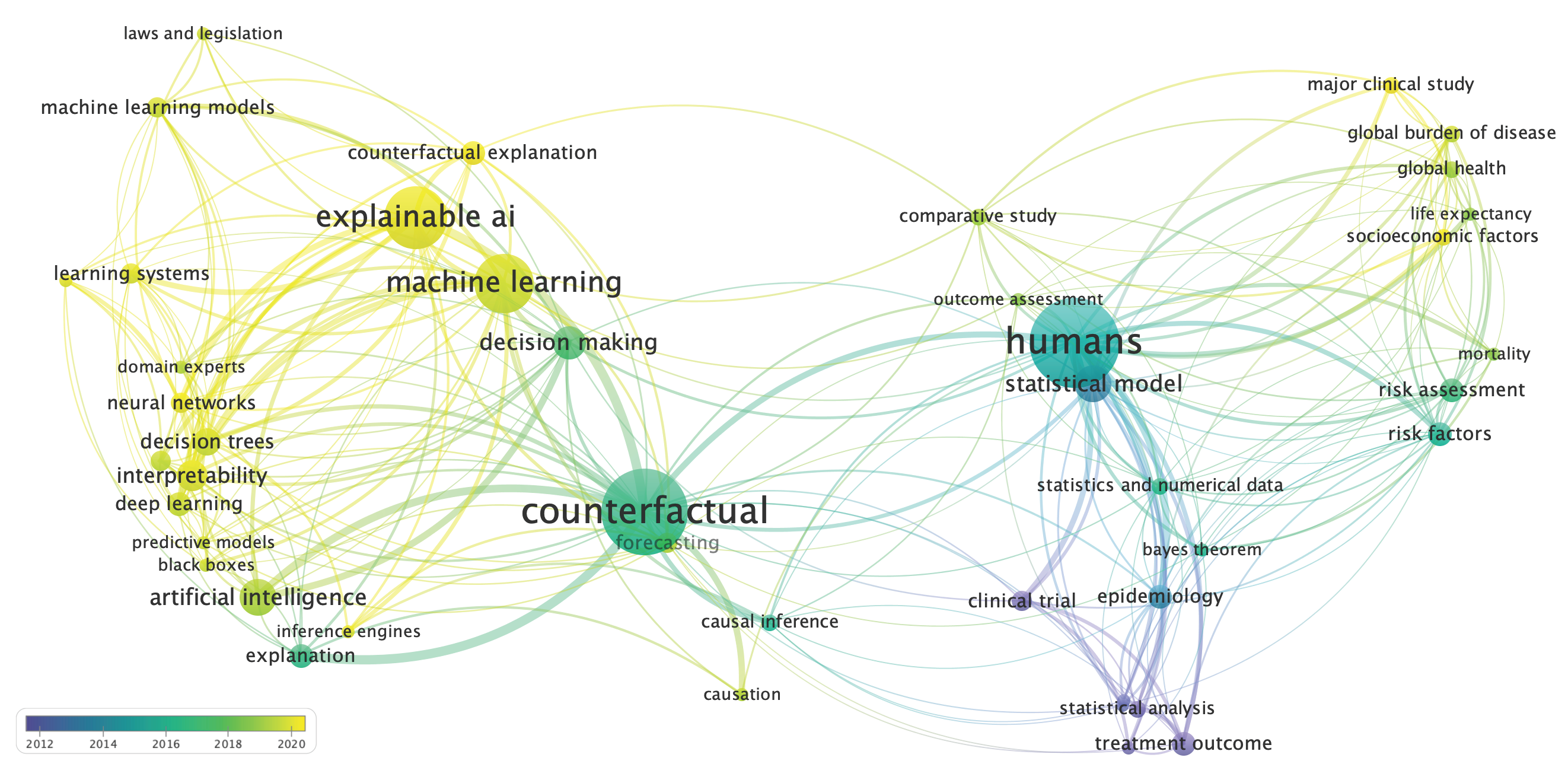

Recently, there has been growing interest in the literature in "what-if" explanations, known as counterfactuals. An example of such an explanation is: "My loan application was rejected. What would I have to change in my application to get my loan accepted?" Counterfactuals currently serve as the bridge between traditional machine-based statistical analysis, human risk factor models, and explainable artificial intelligence (XAI).
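To make the loan example concrete, the following is a minimal sketch of a counterfactual query, assuming a purely hypothetical linear scoring model. The feature names, weights, bias, and the helper functions `score`, `approved`, and `counterfactual` are illustrative assumptions, not a method described on this page. For a linear model, the smallest (Euclidean) change that flips a rejection has a closed form: move the applicant along the weight vector until the score reaches the decision threshold.

```python
# Hypothetical linear loan model: approve when score(x) >= 0.
# Feature names, weights, and bias are illustrative assumptions.
WEIGHTS = {"income_k": 0.04, "debt_k": -0.06, "years_employed": 0.3}
BIAS = -3.0


def score(applicant):
    """Linear score of an applicant (dict of feature name -> value)."""
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def approved(applicant):
    """Decision rule of the hypothetical model."""
    return score(applicant) >= 0.0


def counterfactual(applicant, margin=1e-6):
    """Return the closest (Euclidean) feature vector that is approved.

    For a linear model this is a projection onto the decision boundary,
    pushed slightly past it by `margin`.
    """
    s = score(applicant)
    if s >= 0.0:
        return dict(applicant)  # already approved, nothing to change
    norm_sq = sum(w * w for w in WEIGHTS.values())
    step = (margin - s) / norm_sq
    return {f: applicant[f] + step * WEIGHTS[f] for f in WEIGHTS}


# Usage: a rejected applicant and the suggested changes per feature.
applicant = {"income_k": 40.0, "debt_k": 20.0, "years_employed": 2.0}
cf = counterfactual(applicant)
changes = {f: cf[f] - applicant[f] for f in WEIGHTS}
print(approved(applicant), approved(cf))
print(changes)
```

This sketch treats every feature as freely and independently changeable. It does not account for causal dependencies between features (for example, years of employment influencing income), so the suggested changes may not be actionable in practice.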

Research Topics

This research theme aims to make machine learning models understandable to human decision makers by providing human-centric counterfactual explanations of their inner workings. It builds on the hypothesis that causality is a crucial missing ingredient for opening the black box and rendering it understandable through counterfactual "what-if" explanations. Current explainability algorithms in the literature are based on correlations and are sensitive to sampling bias, which can make the explanations more biased than the black box itself. In this research theme, we argue that causality is a necessary condition for human-understandable explanations. The topics covered in this research theme include:

- Build counterfactual algorithms in XAI

- Develop causal inference methods for XAI from observational data

- Develop novel probabilistic, interpretable algorithms for XAI

- Develop standardized evaluation protocols for counterfactuals in XAI